Product rule

| Topics in Calculus | ||||||||

|---|---|---|---|---|---|---|---|---|

| Fundamental theorem Limits of functions Continuity Mean value theorem

|

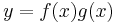

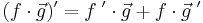

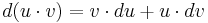

In calculus, the product rule (also called Leibniz's law; see derivation) is a formula used to find the derivatives of products of functions. It may be stated thus:

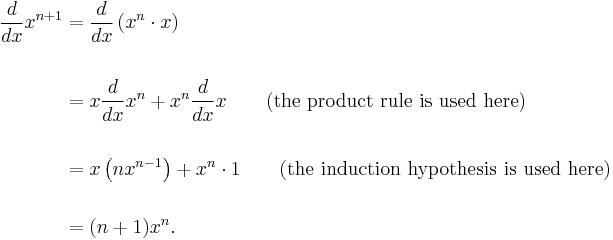

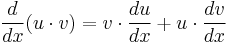

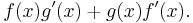

or in the Leibniz notation thus:

.

.


Discovery by Leibniz

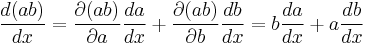

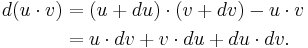

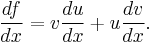

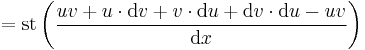

Discovery of this rule is credited to Gottfried Leibniz, who demonstrated it using differentials. Here is Leibniz's argument: Let u(x) and v(x) be two differentiable functions of x. Then the differential of uv is

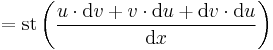

Since the term du·dv is "negligible" (compared to du and dv), Leibniz concluded that

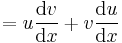

and this is indeed the differential form of the product rule. If we divide through by the differential dx, we obtain

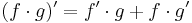

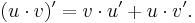

which can also be written in "prime notation" as
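Dropping the du·dv term can be imitated in code with a small "dual number" type, in which the square of the infinitesimal part is defined to be zero. This is only an illustrative sketch, not Leibniz's own formalism; the class name `Dual` and its fields are hypothetical names chosen for this example.

```python
class Dual:
    """A value together with its differential: value + deriv * eps, with eps * eps = 0."""

    def __init__(self, value, deriv=0.0):
        self.value = value
        self.deriv = deriv

    def __add__(self, other):
        return Dual(self.value + other.value, self.deriv + other.deriv)

    def __mul__(self, other):
        # (u + du)(v + dv) = uv + (u dv + v du) + du dv; the du dv term is dropped.
        return Dual(self.value * other.value,
                    self.value * other.deriv + self.deriv * other.value)


x = Dual(3.0, 1.0)      # the variable x at the point 3, with dx = 1
u = x * x               # u = x^2
v = x + Dual(1.0)       # v = x + 1
p = u * v               # p = x^3 + x^2, so p' = 3x^2 + 2x

print(p.value, p.deriv)
```

The multiplication method is exactly the differential form of the product rule, so the derivative of a product is carried along automatically.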

Examples

- Suppose one wants to differentiate ƒ(x) = x² sin(x). By using the product rule, one gets the derivative ƒ′(x) = 2x sin(x) + x² cos(x) (since the derivative of x² is 2x and the derivative of sin(x) is cos(x)).

- One special case of the product rule is the constant multiple rule, which states: if c is a real number and ƒ(x) is a differentiable function, then cƒ(x) is also differentiable, and its derivative is (c × ƒ)′(x) = c × ƒ′(x). This follows from the product rule since the derivative of any constant is zero. This, combined with the sum rule for derivatives, shows that differentiation is linear.

- The rule for integration by parts is derived from the product rule, as is (a weak version of) the quotient rule. (It is a "weak" version in that it does not prove that the quotient is differentiable, but only says what its derivative is if it is differentiable.)
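The first example can be checked numerically. The sketch below compares the product-rule derivative of x² sin(x) with a central difference quotient; the sample point, step size and helper names are arbitrary choices made for illustration.

```python
import math


def central_difference(func, x, h=1e-6):
    """Approximate func'(x) with a symmetric difference quotient."""
    return (func(x + h) - func(x - h)) / (2 * h)


def f(x):
    return x**2 * math.sin(x)


def f_prime_by_product_rule(x):
    # (x^2)' * sin(x) + x^2 * (sin(x))'
    return 2 * x * math.sin(x) + x**2 * math.cos(x)


x0 = 1.3  # arbitrary sample point
numeric = central_difference(f, x0)
exact = f_prime_by_product_rule(x0)
print(abs(numeric - exact) < 1e-6)
```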

A common error

It is a common error, when studying calculus, to suppose that the derivative of (uv) equals (u′)(v′) (Leibniz himself made this error initially);[1] however, there are clear counterexamples to this. Any function ƒ(x) can be written as ƒ(x) · 1, since 1 is the identity element for multiplication. If the above-mentioned misconception were true, the derivative of ƒ(x) · 1 would be ƒ′(x) · 0 = 0, because the derivative of a constant (such as 1) is zero. Every function would then have derivative zero, which is plainly false.
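A second concrete counterexample, chosen here only for illustration, takes u(x) = v(x) = x:

$$(u \cdot v)'(x) = \frac{d}{dx}\,x^2 = 2x, \qquad \text{whereas} \qquad u'(x) \cdot v'(x) = 1 \cdot 1 = 1.$$

The two expressions agree only at x = 1/2, so they are not the same function.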

Proof of the product rule

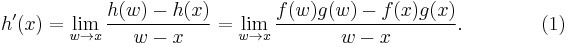

A rigorous proof of the product rule can be given using the properties of limits and the definition of the derivative as a limit of Newton's difference quotient.

If

and ƒ and g are each differentiable at the fixed number x, then

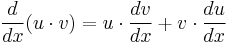

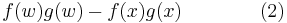

Now the difference

is the area of the big rectangle minus the area of the small rectangle in the illustration.

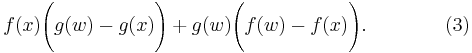

The region between the smaller and larger rectangle can be split into two rectangles, the sum of whose areas is[2]

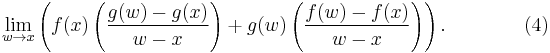

Therefore the expression in (1) is equal to

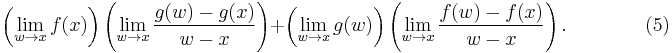

Assuming that all limits used exist, (4) is equal to

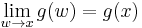

Now

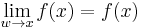

because ƒ(x) remains constant as w → x;

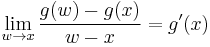

because g is differentiable at x;

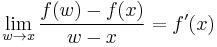

because ƒ is differentiable at x;

and now the "hard" one:

because g, being differentiable, is continuous at x.

We conclude that the expression in (5) is equal to

Alternative proof

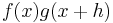

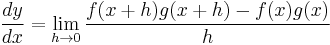

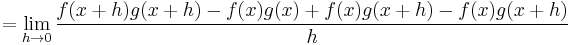

Suppose :

By applying Newton's difference quotient and the limit as h approaches 0, we are able to represent the derivative in the form

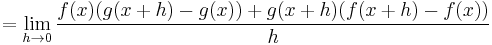

In order to simplify this limit we add and subtract the term  to the numerator, keeping the fraction's value unchanged

to the numerator, keeping the fraction's value unchanged

This allows us to factorise the numerator like so

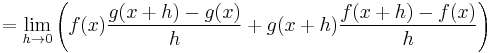

The fraction is split into two

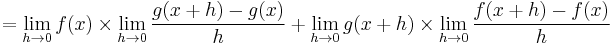

The limit is applied to each term and factor of the limit expression

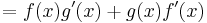

Each limit is evaluated. Taking into consideration the definition of the derivative, the result is

Using logarithms

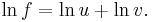

Let f = uv and suppose u and v are positive functions of x. Then

Differentiating both sides:

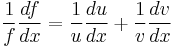

and so, multiplying the left side by f, and the right side by uv,

The proof appears in [1]. Note that since u, v need to be continuous, the assumption on positivity does not diminish the generality.

This proof relies on the chain rule and on the properties of the natural logarithm function, both of which are deeper than the product rule. From one point of view, that is a disadvantage of this proof. On the other hand, the simplicity of the algebra in this proof perhaps makes it easier to understand than a proof using the definition of differentiation directly.

Using the chain rule

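The product rule can be considered a special case of the chain rule for several variables:

$$\frac{d(ab)}{dx} = \frac{\partial(ab)}{\partial a}\,\frac{da}{dx} + \frac{\partial(ab)}{\partial b}\,\frac{db}{dx} = b\,\frac{da}{dx} + a\,\frac{db}{dx}.$$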

Using non-standard analysis

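Let u and v be continuous functions in x, and let dx, du and dv be infinitesimals. Writing st for the standard part function, which associates to a finite hyperreal number the real number infinitely close to it, this gives

$$\frac{d(uv)}{dx} = \operatorname{st}\!\left( \frac{(u + du)(v + dv) - uv}{dx} \right) = \operatorname{st}\!\left( \frac{u\,dv + v\,du + du\,dv}{dx} \right) = \operatorname{st}\!\left( u\,\frac{dv}{dx} + (v + dv)\,\frac{du}{dx} \right) = u\,\frac{dv}{dx} + v\,\frac{du}{dx}.$$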

Generalizations

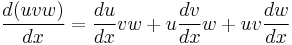

A product of more than two factors

The product rule can be generalized to products of more than two factors. For example, for three factors we have

.

.

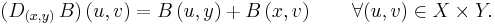

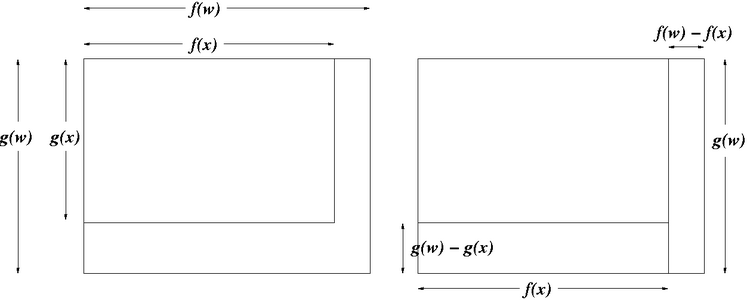

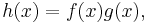

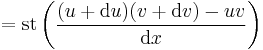

For a collection of functions  , we have

, we have
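The rule for several factors translates directly into a loop: differentiate one factor at a time and multiply by all the others. The following is a minimal sketch; the function name `product_derivative` and the three sample factors are assumptions made for this example.

```python
import math


def product_derivative(funcs, derivs, x):
    """Sum over i of f_i'(x) times the product of all other factors f_j(x)."""
    total = 0.0
    for i, d in enumerate(derivs):
        term = d(x)
        for j, f in enumerate(funcs):
            if j != i:
                term *= f(x)
        total += term
    return total


# Sample factors x, sin(x), exp(x) together with their known derivatives.
funcs = [lambda x: x, math.sin, math.exp]
derivs = [lambda x: 1.0, math.cos, math.exp]


def product(x):
    return x * math.sin(x) * math.exp(x)


x0 = 0.7
h = 1e-6
numeric = (product(x0 + h) - product(x0 - h)) / (2 * h)  # central difference
print(abs(product_derivative(funcs, derivs, x0) - numeric) < 1e-6)
```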

Higher derivatives

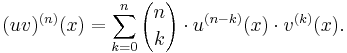

It can also be generalized to the Leibniz rule for the nth derivative of a product of two factors:

See also binomial coefficient and the formally quite similar binomial theorem. See also Leibniz rule (generalized product rule).
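For polynomials the Leibniz rule can be verified exactly with integer arithmetic. The sketch below stores a polynomial as a list of coefficients (index = power of x); the helper names and the two sample polynomials are hypothetical choices for illustration.

```python
from math import comb


def poly_mul(p, q):
    """Multiply two polynomials given as coefficient lists."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def poly_add(p, q):
    """Add two polynomials, padding the shorter list with zeros."""
    size = max(len(p), len(q))
    p = p + [0] * (size - len(p))
    q = q + [0] * (size - len(q))
    return [a + b for a, b in zip(p, q)]


def poly_diff(p, times=1):
    """Differentiate a polynomial the given number of times."""
    for _ in range(times):
        p = [k * c for k, c in enumerate(p)][1:] or [0]
    return p


def trim(p):
    """Drop trailing zero coefficients so that equal polynomials compare equal."""
    while len(p) > 1 and p[-1] == 0:
        p = p[:-1]
    return p


def leibniz(u, v, n):
    """n-th derivative of u*v as sum of C(n, k) * u^(n-k) * v^(k)."""
    total = [0]
    for k in range(n + 1):
        term = poly_mul(poly_diff(u, n - k), poly_diff(v, k))
        total = poly_add(total, [comb(n, k) * c for c in term])
    return trim(total)


u = [1, 2, 0, 1]   # 1 + 2x + x^3
v = [0, 1, 3]      # x + 3x^2
direct = trim(poly_diff(poly_mul(u, v), 2))
print(leibniz(u, v, 2) == direct)
```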

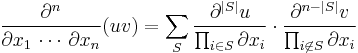

Higher partial derivatives

For partial derivatives, we have

where the index S runs through the whole list of 2n subsets of {1, ..., n}. If this seems hard to understand, consider the case in which n = 3:

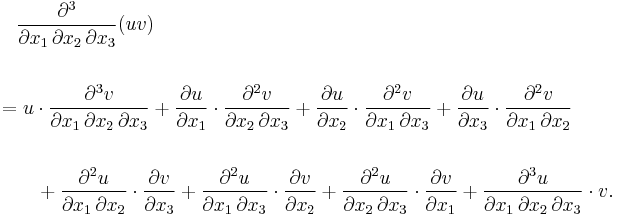

A product rule in Banach spaces

Suppose X, Y, and Z are Banach spaces (which includes Euclidean space) and B : X × Y → Z is a continuous bilinear operator. Then B is differentiable, and its derivative at the point (x, y) in X × Y is the linear map D₍ₓ,ᵧ₎B : X × Y → Z given by

$$\bigl(D_{(x,y)} B\bigr)(u, v) = B(u, y) + B(x, v) \qquad \text{for all } (u, v) \in X \times Y.$$

Derivations in abstract algebra

In abstract algebra, the product rule is used to define what is called a derivation, not vice versa.

For vector functions

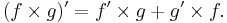

The product rule extends to scalar multiplication, dot products, and cross products of vector functions.

For scalar multiplication:

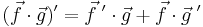

For dot products:

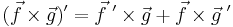

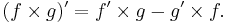

For cross products:

(Beware: since cross products are not commutative, it is not correct to write  But cross products are anticommutative, so it can be written as

But cross products are anticommutative, so it can be written as  )

)
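The warning about the order of factors in the cross product can be checked numerically. The sketch below uses two arbitrary sample vector functions (assumptions made for this example) and compares the correctly ordered rule, its anticommutative rewriting, and the incorrect commuted version against a central difference quotient.

```python
import math


def cross(a, b):
    """Cross product of two 3-component tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def max_abs_diff(a, b):
    return max(abs(x - y) for x, y in zip(a, b))


# Sample vector functions and their derivatives.
def f(t): return (t, t * t, 1.0)
def df(t): return (1.0, 2 * t, 0.0)
def g(t): return (math.sin(t), math.cos(t), t)
def dg(t): return (math.cos(t), -math.sin(t), 1.0)


t0, h = 0.5, 1e-6
numeric = tuple((p - m) / (2 * h)
                for p, m in zip(cross(f(t0 + h), g(t0 + h)),
                                cross(f(t0 - h), g(t0 - h))))

correct = add(cross(df(t0), g(t0)), cross(f(t0), dg(t0)))   # f' x g + f x g'
rewritten = sub(cross(df(t0), g(t0)), cross(dg(t0), f(t0)))  # f' x g - g' x f
wrong = add(cross(df(t0), g(t0)), cross(dg(t0), f(t0)))      # f' x g + g' x f

print(max_abs_diff(correct, numeric) < 1e-6)
print(max_abs_diff(rewritten, numeric) < 1e-6)
print(max_abs_diff(wrong, numeric) > 0.1)
```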

For scalar fields

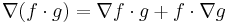

For scalar fields the concept of gradient is the analog of the derivative:

An application

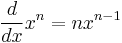

Among the applications of the product rule is a proof that

when n is a positive integer (this rule is true even if n is not positive or is not an integer, but the proof of that must rely on other methods). The proof is by mathematical induction on the exponent n. If n = 0 then xn is constant and nxn − 1 = 0. The rule holds in that case because the derivative of a constant function is 0. If the rule holds for any particular exponent n, then for the next value, n + 1, we have

Therefore if the proposition is true of n, it is true also of n + 1.

See also

- general Leibniz rule

- Reciprocal rule

- Differential (calculus)

- Derivation (abstract algebra)

- Product Rule Practice Problems [Kouba, University of California: Davis]

References

- [1] Michelle Cirillo (August 2007). "Humanizing Calculus". The Mathematics Teacher 101 (1): 23–27. http://www.nctm.org/uploadedFiles/Articles_and_Journals/Mathematics_Teacher/Humanizing%20Calculus.pdf.

- [2] The illustration disagrees with some special cases, since, in actuality, ƒ(w) need not be greater than ƒ(x) and g(w) need not be greater than g(x). Nonetheless, the equality of (2) and (3) is easily checked by algebra.
